Day 2

Panel 1 - Bridging Models to Practice across Clinical and Biotech

"Sush" Raghunathan, data scientist. AI is used in Admin + Operational, Knowledge and Information Retrieval, Analytical and Diagnostic tools, Decision and Reasoning.

They specify three levels for AI deployment.

Tech Capability → Evidence, HFE, Monitoring

Bitterman et al, Approaching autonomy in medical artificial intelligence.

Risks are to patient privacy, quality of patient care, and regulatory compliance.

Note an "Automation Bias" where you just implicitly trust something that's been automated without challenging it.

Elizabeth Lund operational pressure quote.

Human factors shape safe clinical adoption

Risk-based governance and optimized eval balance innovation, safety, and impact.

Sujaya Srinivasan, AWS - From silos to solutions, federated AI in clinical and biotech

The idea was access to data: it is siloed, sensitive, cannot be shared, and there is limited data at a single site for a rare event. How do you collaboratively train AI models without sharing your data? A bit like a zero-knowledge proof.

You train locally and only the weights are shared to update some global model.
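A minimal sketch of that loop, assuming plain federated averaging on a one-parameter model `y = w * x`. The speaker did not show code; the function names, toy data, and hyperparameters here are all hypothetical. Each site runs a few gradient steps on data that never leaves it, and the server only sees the resulting weights.

```python
from typing import List, Tuple

Site = List[Tuple[float, float]]  # (x, y) pairs that stay at the site


def local_update(w: float, data: Site, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few gradient steps on one site's private data for y = w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global: float, sites: List[Site]) -> float:
    """Each site trains locally; the server averages weights by sample count."""
    total = sum(len(site) for site in sites)
    return sum(local_update(w_global, site) * len(site) for site in sites) / total


def train(sites: List[Site], rounds: int = 200) -> float:
    """Repeat federated rounds starting from w = 0."""
    w = 0.0
    for _ in range(rounds):
        w = federated_round(w, sites)
    return w


# Hypothetical toy data: three sites whose points all follow y = 3x.
sites = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]
w_final = train(sites)
print(round(w_final, 4))
```

Real deployments typically add secure aggregation or differential privacy on top, since shared weights can still leak information about the local data.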

AISB Consortium (AlQuraishi), Lilly TuneLab, Rhino Federated Computing, MELLODDY, Owkin, for drug discovery.

Talk about Cancer AI Alliance: cancer centers got together and partnered with tech (Google, MS, Deloitte) to accelerate research for rare cancers, gain insights from large and diverse patient populations, and improve clinical decision support. They use the Rhino platform. Early days, lots of promise.

Challenges: data harmonization, privacy preservation.

Roy Tal Dew, nVidia - Translation of Bio-Foundation Model, a Biomolecular perspective

Fortune magazine: "The Next Industrial Revolution". The first wave, in the 80s and 90s, was physics-based: molecular dynamics methods that tried to predict molecular docking. The second wave, from the 2000s to the 2020s, was custom machine learning: bespoke, assay-based models.

The third wave is biomolecular FMs. AlphaFold2 is able to position 90% of proteins correctly! This is comparable to X-ray crystallography.

There are challenges: generalizability and accuracy. You'll need a lot more data. Physics-based methods are used to create synthetic data.

Eroom's Law (lol). $2.5B, 10-15 years, 90% failure rate to produce a drug in the pharma industry! Can AI help here? You can certainly see successes in the preclinical domain. Chai Discovery. AlphaFold + RFDiffusion. You're in an era where you don't just publish a paper on a model that works in-silico. That doesn't excite people anymore. You publish broad experimental results/validation.

What about clinical relevance? Readouts: Phase 1 has a nice 80-90% success rate, better than averages of ~40%; Phase 2 has a rate of 40%; Phase 3 is TBD.

Carbon sequestration, plastic degradation, preventing plant disease... you can extend this drug discovery translation to solve these as well.

Kyunghyun Cho, NYU, Genentech/Roche, Prescient Design, gRED

The process of drug discovery:

Science → Target discovery/validation → Molecule design/discovery → Molecule optimization/characterization → In-vivo validation → Clinical studies → Clinics

How close are we to AI-driven drug discovery? Very far! There's this slice of molecule design/discovery + a little of molecule optimization. The idea is not to speed up the process but to improve the success rate. Slow and steady. This is where FMs come in. You can finally have something that makes connections, however small, across all the stages, + clinical trials, literature, etc. Predict forward, backpropagate... backwards.

- "Organic buy-in" for adoption.

- Privacy in federated learning? Very hard to convince IRBs about this stuff.

- nVidia - RAPIDS Data Science framework.

- Patient-centeredness and Wholistic Pictures are a big theme here.

Panel 2 - Human AI Alignment: Addressing robustness, fairness, explainability, and compliance in building responsible and trustworthy AI

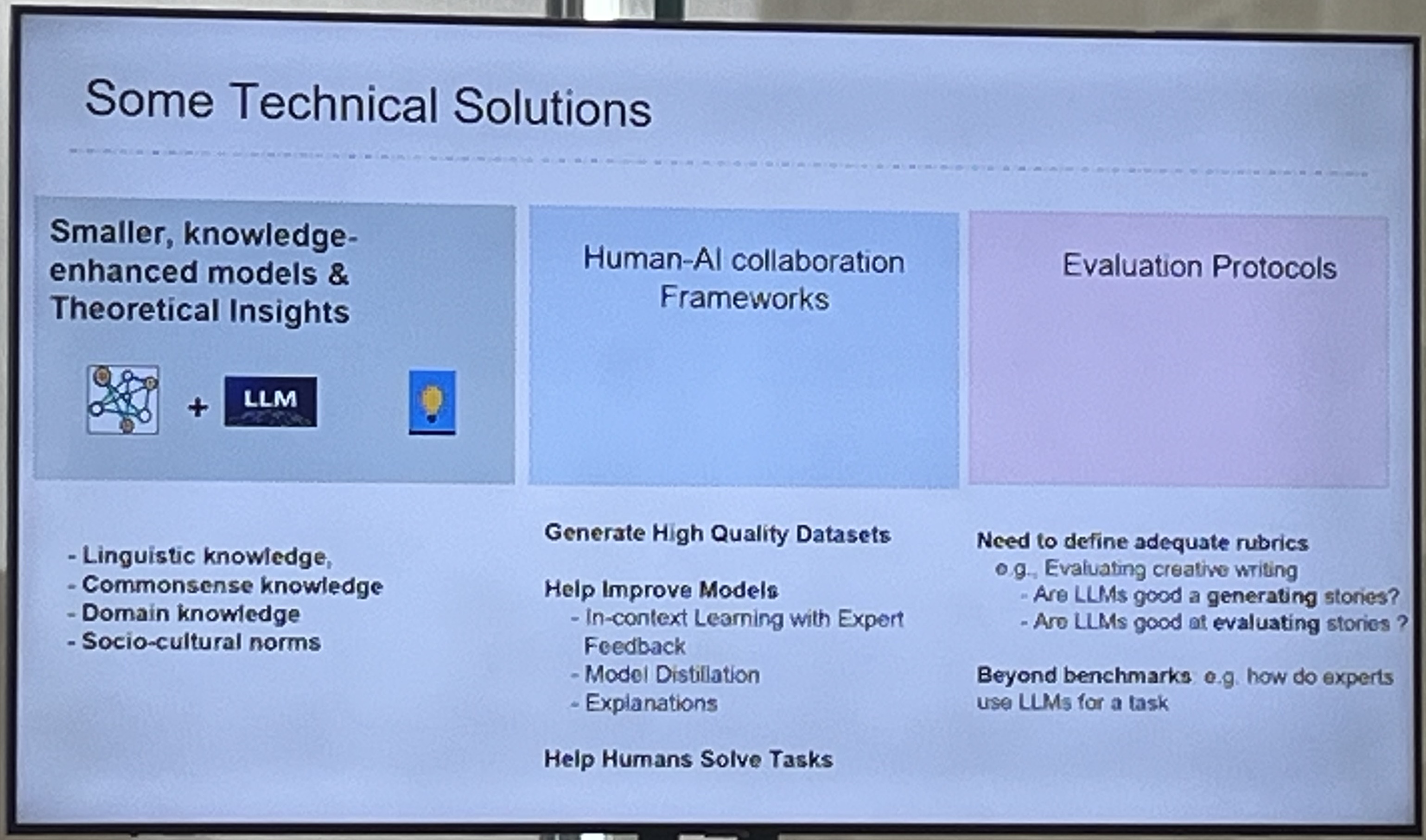

Smaranda Muresan, Barnard - Human-centric NLP for Social Good

- Sample focus: predict opioid overdose from investigation reports.

- Sample focus: Cross-cultural norms, discovery and understanding.

Challenges are the usual: high-quality datasets, EVAL!

Multilingual Multimodal Social Norms → Model → Fine-grained Human eval → Loop

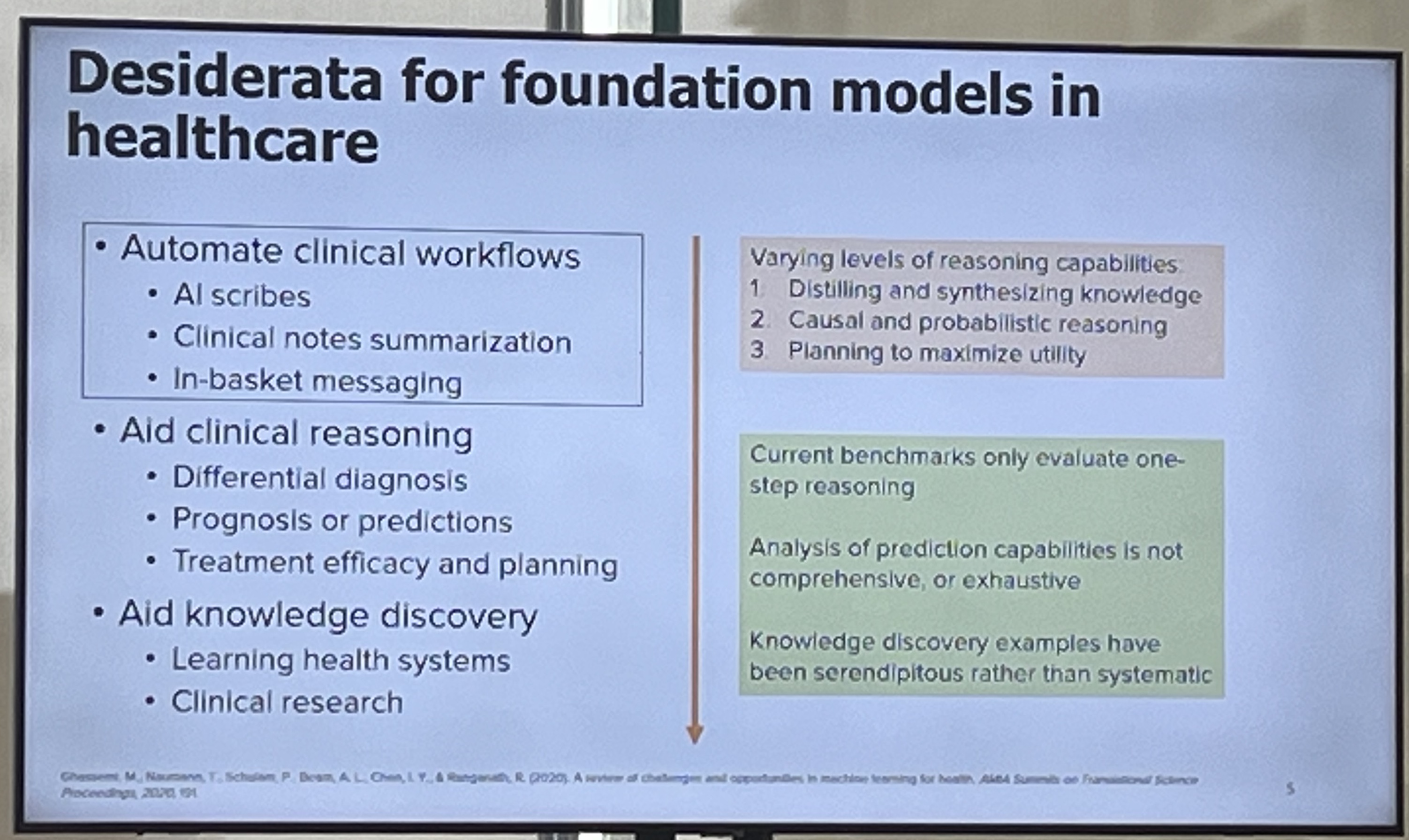

Shalmali Joshi, DBMI - Alignment Through capabilities in foundation models for health and medicine

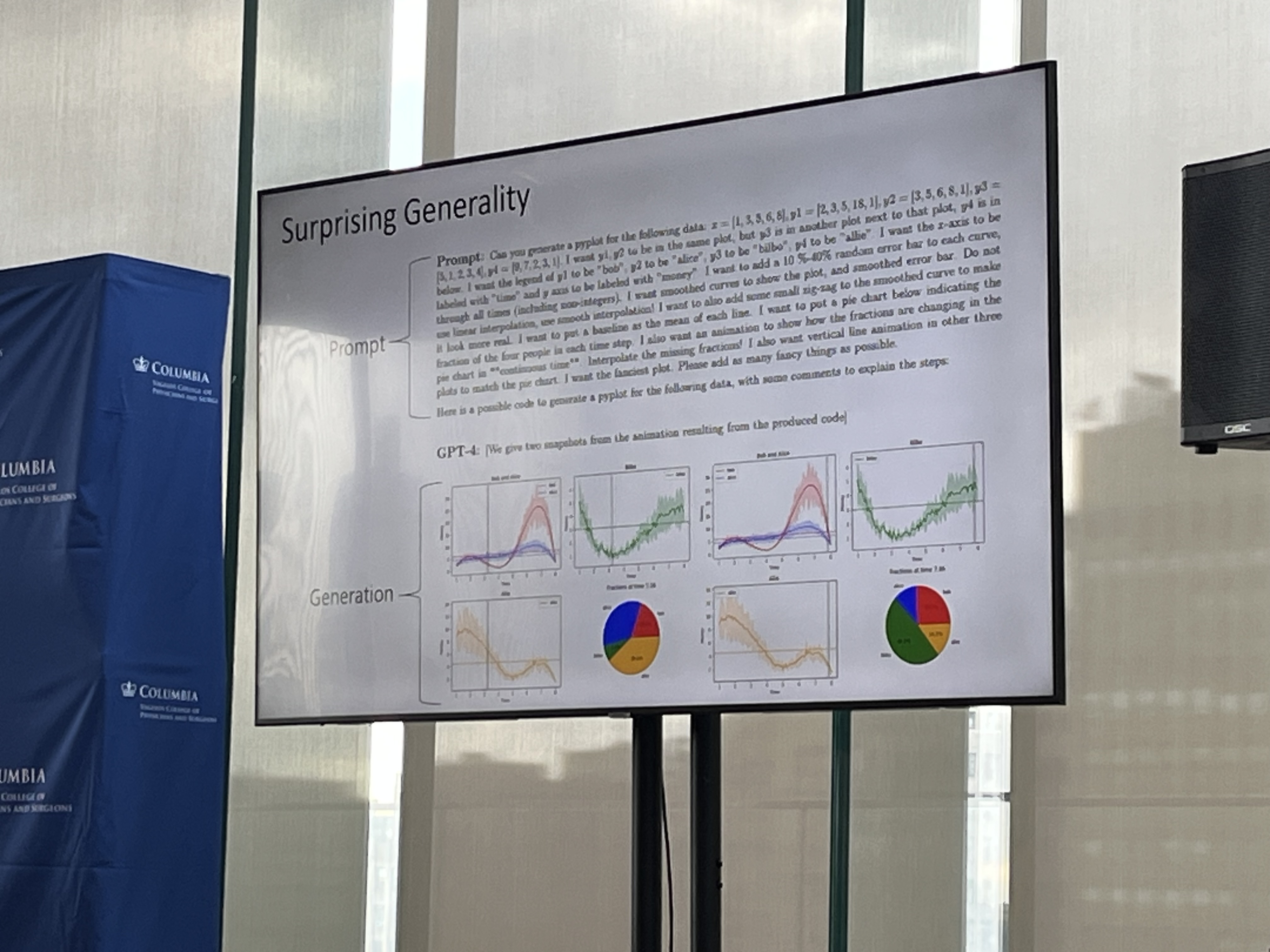

Major success in mathematical reasoning and coding because "alignment", i.e. checking for correctness, is easier than conceptualizing proofs and code: you can use verifiers with reinforcement learning.

Kinda exhausted corpora in the code-based reasoning models.

You need to quantify the impact of statistical biases on AI behaviour. Develop AI that enables learning from feedback. Then enhance AI systems so they fail gracefully.

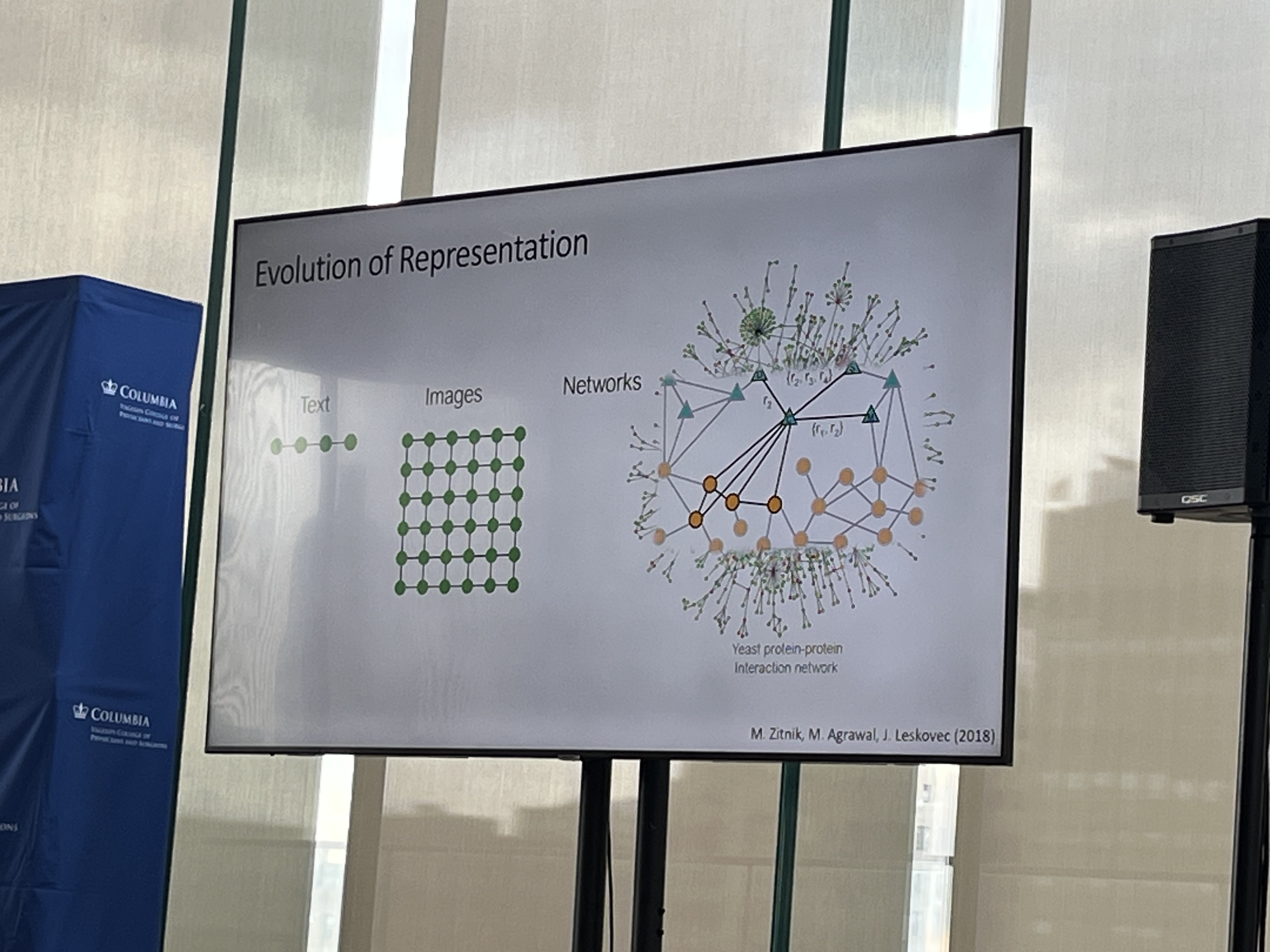

We went to FM because we thought it could solve conventional ML problems, big one being generalizability.

Desiderata:

When you benchmark you need to span a diversity of clinical settings (of course).

LLMs cannot "reason" accurately over missing data. When something is not measured in patient data, it is (or may be) informative! There's a reason why it wasn't measured.

Unfairness: Pulse Oximeter readings are affected by skin color. Scale in other domains won't necessarily map to healthcare. The challenges are harder.
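On the missing-data point above: one common way to keep "not measured" visible to a model is an explicit missingness indicator next to each value, rather than silent imputation. This is only a sketch of that general idea, not something shown in the talk; the feature names, the `fill` default, and the helper `encode_with_missingness` are hypothetical.

```python
from typing import Dict, List, Optional


def encode_with_missingness(
    record: Dict[str, Optional[float]],
    features: List[str],
    fill: float = 0.0,
) -> List[float]:
    """Emit (value, was_missing) for each feature so absence stays a signal."""
    row: List[float] = []
    for name in features:
        value = record.get(name)
        missing = value is None
        row.append(fill if missing else value)
        row.append(1.0 if missing else 0.0)
    return row


# Hypothetical patient: a lactate test was never ordered.
features = ["lactate", "heart_rate"]
patient = {"heart_rate": 82.0}
encoded = encode_with_missingness(patient, features)
print(encoded)  # [0.0, 1.0, 82.0, 0.0]
```

The flag lets a downstream model learn that "lactate was not ordered" carries information of its own, separate from whatever fill value is used.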

Gamze Gursoy, DBMI - Human/AI Alignment - Patient Privacy

Large models are data hungry but biomedical data is stuck in silos, primarily due to patient privacy concerns.

Researchers have demonstrated that genomic data cannot be anonymized! She even showed how single-cell count matrices can cause private information leakage. In clinical data, dataset overlap breaks anonymity!
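A toy sketch of why dataset overlap breaks anonymity, assuming a classic linkage attack on quasi-identifiers. This is not the speaker's method; the field names, records, and the `link` helper are all made up for illustration. If a combination of innocuous fields is unique in both a "de-identified" clinical table and a public roster, joining on it re-attaches a name to a diagnosis.

```python
from typing import Dict, List, Tuple

QUASI_IDS = ("zip3", "birth_year", "sex")  # hypothetical quasi-identifiers


def unique_by_quasi_ids(rows: List[Dict]) -> Dict[tuple, Dict]:
    """Index rows by quasi-identifier tuple, keeping only unique combinations."""
    buckets: Dict[tuple, List[Dict]] = {}
    for row in rows:
        buckets.setdefault(tuple(row[k] for k in QUASI_IDS), []).append(row)
    return {key: group[0] for key, group in buckets.items() if len(group) == 1}


def link(deidentified: List[Dict], public: List[Dict]) -> List[Tuple[str, str]]:
    """Return (name, diagnosis) pairs for records unique in both datasets."""
    clinical = unique_by_quasi_ids(deidentified)
    roster = unique_by_quasi_ids(public)
    return [
        (roster[key]["name"], clinical[key]["diagnosis"])
        for key in clinical
        if key in roster
    ]


clinical_rows = [
    {"zip3": "100", "birth_year": 1980, "sex": "F", "diagnosis": "condition A"},
    {"zip3": "100", "birth_year": 1975, "sex": "M", "diagnosis": "condition B"},
    {"zip3": "100", "birth_year": 1975, "sex": "M", "diagnosis": "condition C"},
]
public_rows = [
    {"zip3": "100", "birth_year": 1980, "sex": "F", "name": "Person 1"},
    {"zip3": "100", "birth_year": 1975, "sex": "M", "name": "Person 2"},
]
matches = link(clinical_rows, public_rows)
print(matches)  # [('Person 1', 'condition A')]
```

Only the record whose quasi-identifier combination is unique on both sides gets re-identified; the two clinical records that share a combination stay ambiguous.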

But you gotta share data to deliver Shareholder Value™. How do you accomplish this? Lots of work in CS (e.g. homomorphic encryption, zero-knowledge proofs, etc)

Privacy problems are not specific to FMs but harder to quantify in them. What's even harder is how to protect the data while being able to use the models. Do people know how their data is being used in the FM? Does the modeler even know?

Model sycophancy is a concern. Cognitive offloading is a concern. Resource-intensiveness is a concern (nuclear reactors?).

"Fairness is inseparable from causality." Note that bias can exist on the other side as well! People might just want to study people like them.

Integer FitBit data of 5 days can identify you with 99.9% accuracy from a database of a million people! Our 'silent' patterns are as unique as us too!

In Privacy Research, you can tell the person "Listen, here are the risks, I am aware of them" to encourage participation. Reminded me of the Steve Jobs thing about privacy.

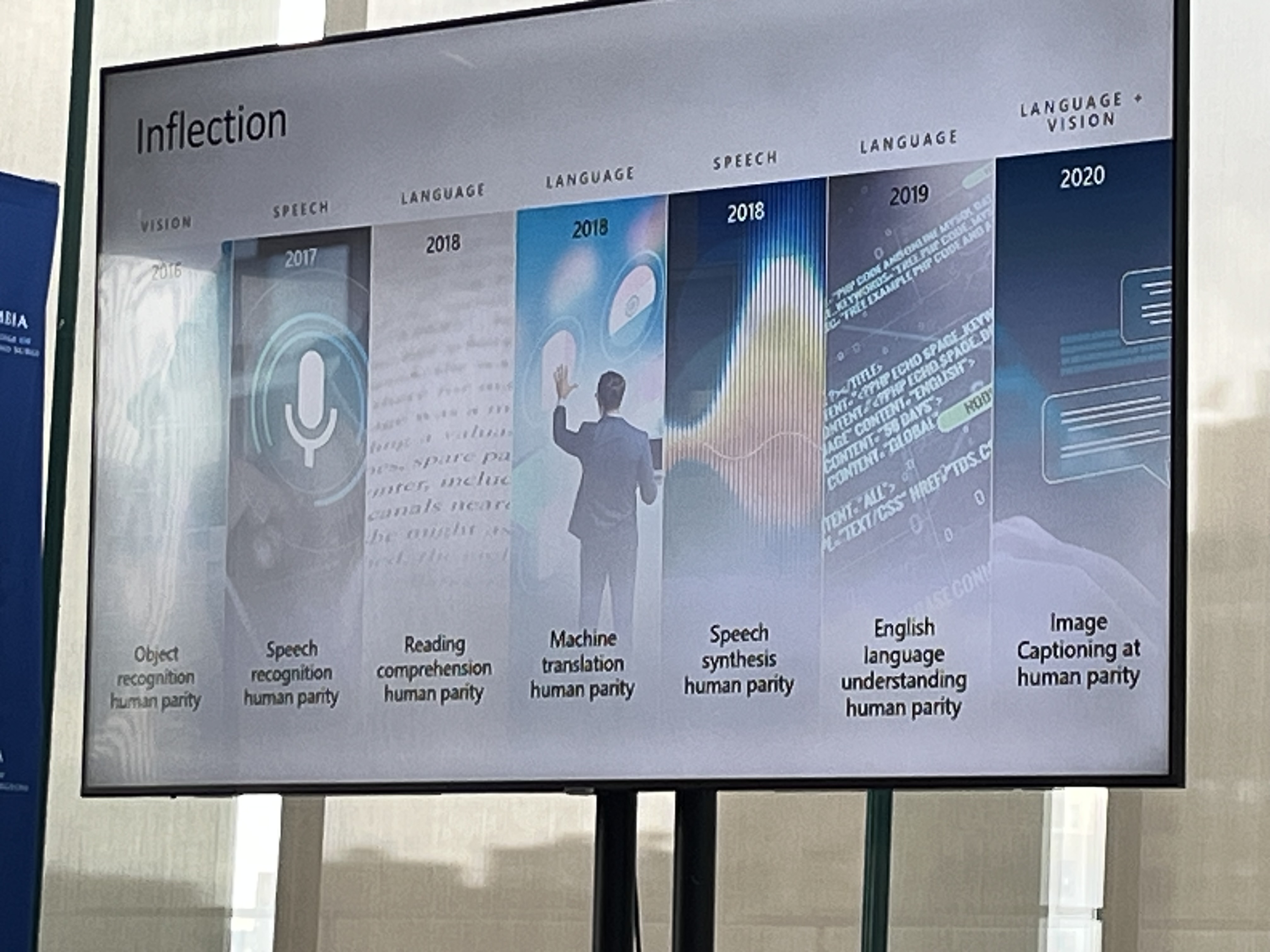

Eric Horvitz, CSO, Microsoft - Foundation Models at the Frontier, Power, Promise, Possibilities.

GPT4 was birthed near Des Moines, IA.

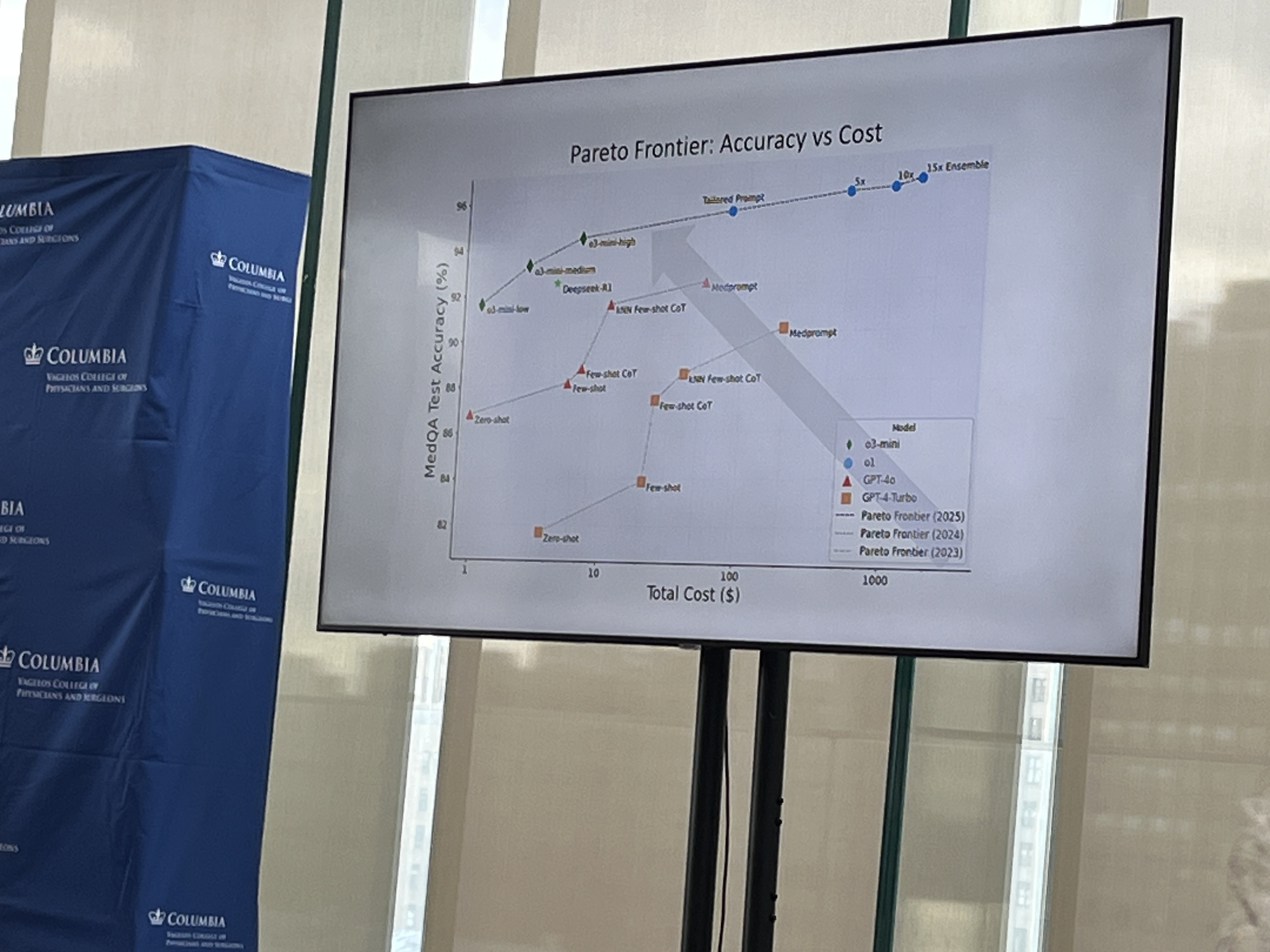

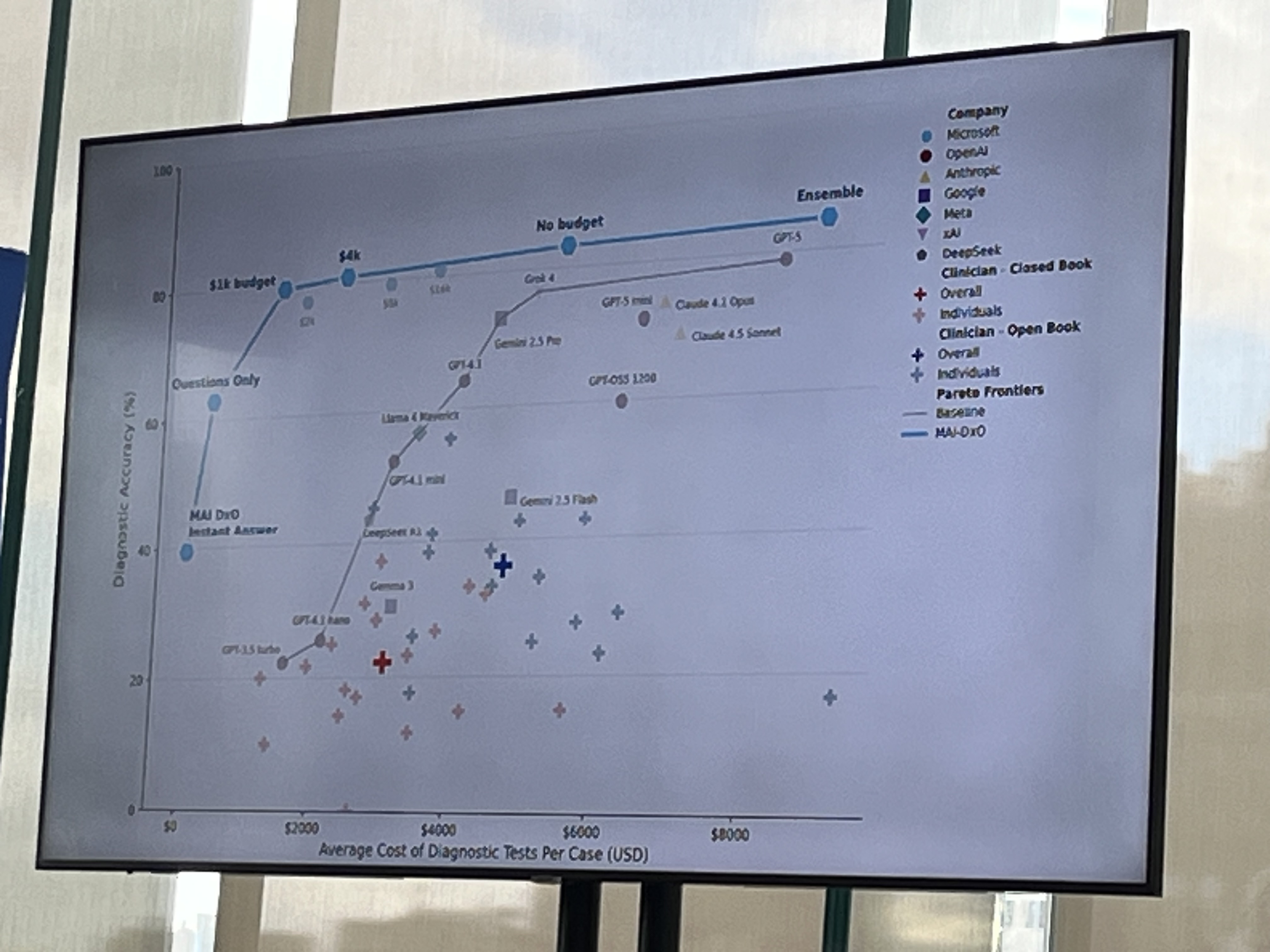

Pareto Frontier: Accuracy vs Cost.
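For reference, a small sketch of what "on the Pareto frontier" means for accuracy vs cost: a model is kept only if no other model is both at least as cheap and at least as accurate. The model names and numbers below are invented, not taken from the slides.

```python
from typing import List, Tuple

Model = Tuple[str, float, float]  # (name, cost, accuracy)


def pareto_frontier(models: List[Model]) -> List[Model]:
    """Return the non-dominated models, ordered from cheapest to most expensive."""
    frontier: List[Model] = []
    best_accuracy = float("-inf")
    # Sweep by increasing cost; on cost ties, see the more accurate model first.
    for model in sorted(models, key=lambda m: (m[1], -m[2])):
        if model[2] > best_accuracy:
            frontier.append(model)
            best_accuracy = model[2]
    return frontier


# Hypothetical (cost, accuracy) points.
models = [
    ("small", 1.0, 0.70),
    ("medium", 5.0, 0.80),
    ("wasteful", 6.0, 0.75),
    ("large", 20.0, 0.90),
]
frontier_names = [name for name, _, _ in pareto_frontier(models)]
print(frontier_names)  # ['small', 'medium', 'large']
```

Here "wasteful" drops out because "medium" is both cheaper and more accurate.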

Gigapath, BiomedParse

"Supercharging of Discovery across Temporal (y-axis) and Spatial (x-axis) Scales": Electrons → Atoms → Molecules → ... → Populations.

Atoms and Molecules: Can we do room-temperature superconductors? Solve Schrödinger? At Microsoft, they're building a Deep Learning Emulator to simulate molecules.

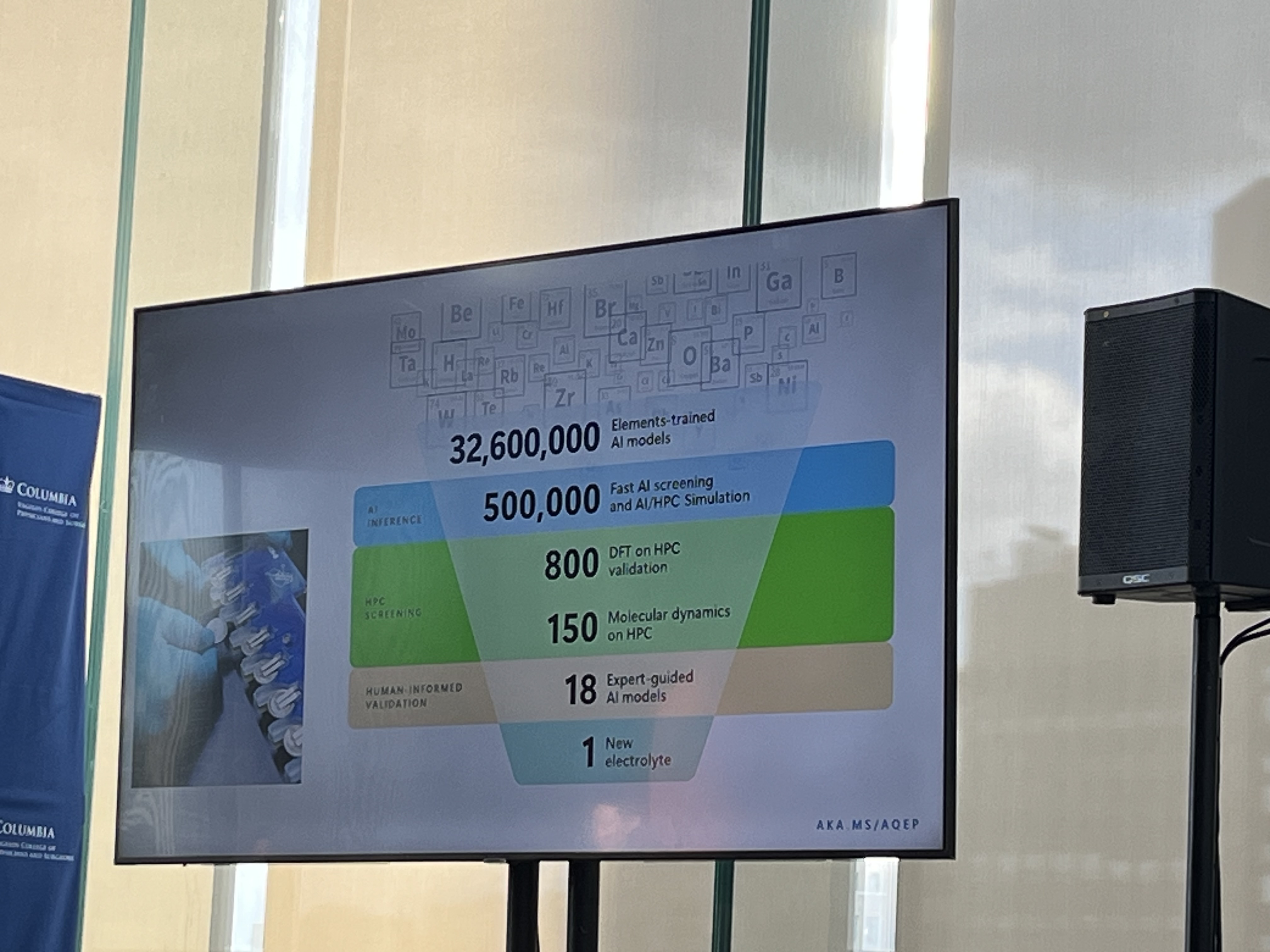

Microsoft and Batteries: AI tools generated 32M candidates → AI Screening on HPC - INSERT_PHOTO

The funnel is the same with new therapeutics.

Diffusion-based methods from image generation can solve problems in molecular design (think constraints).

Virtual Cell might be a federation of models.

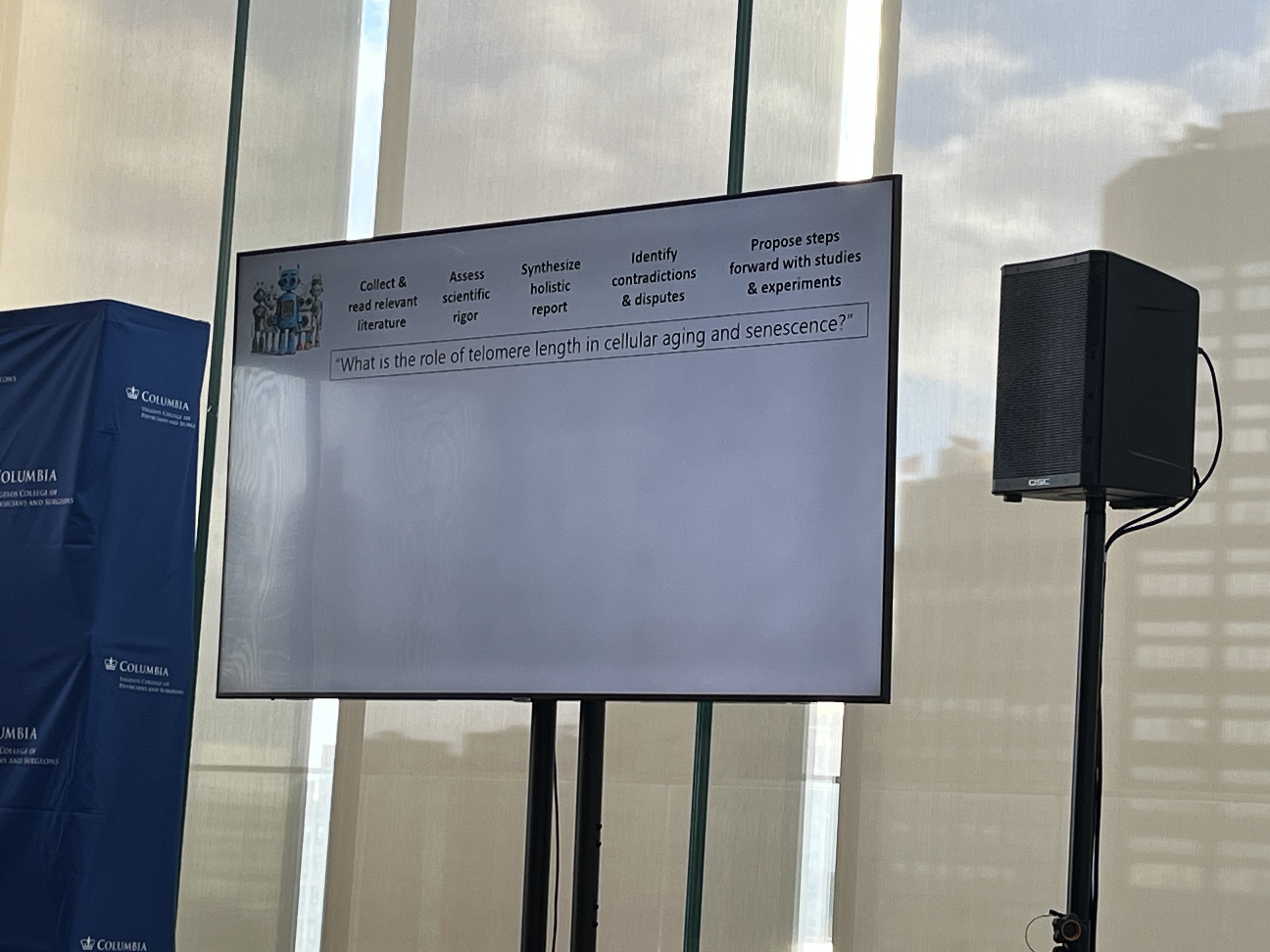

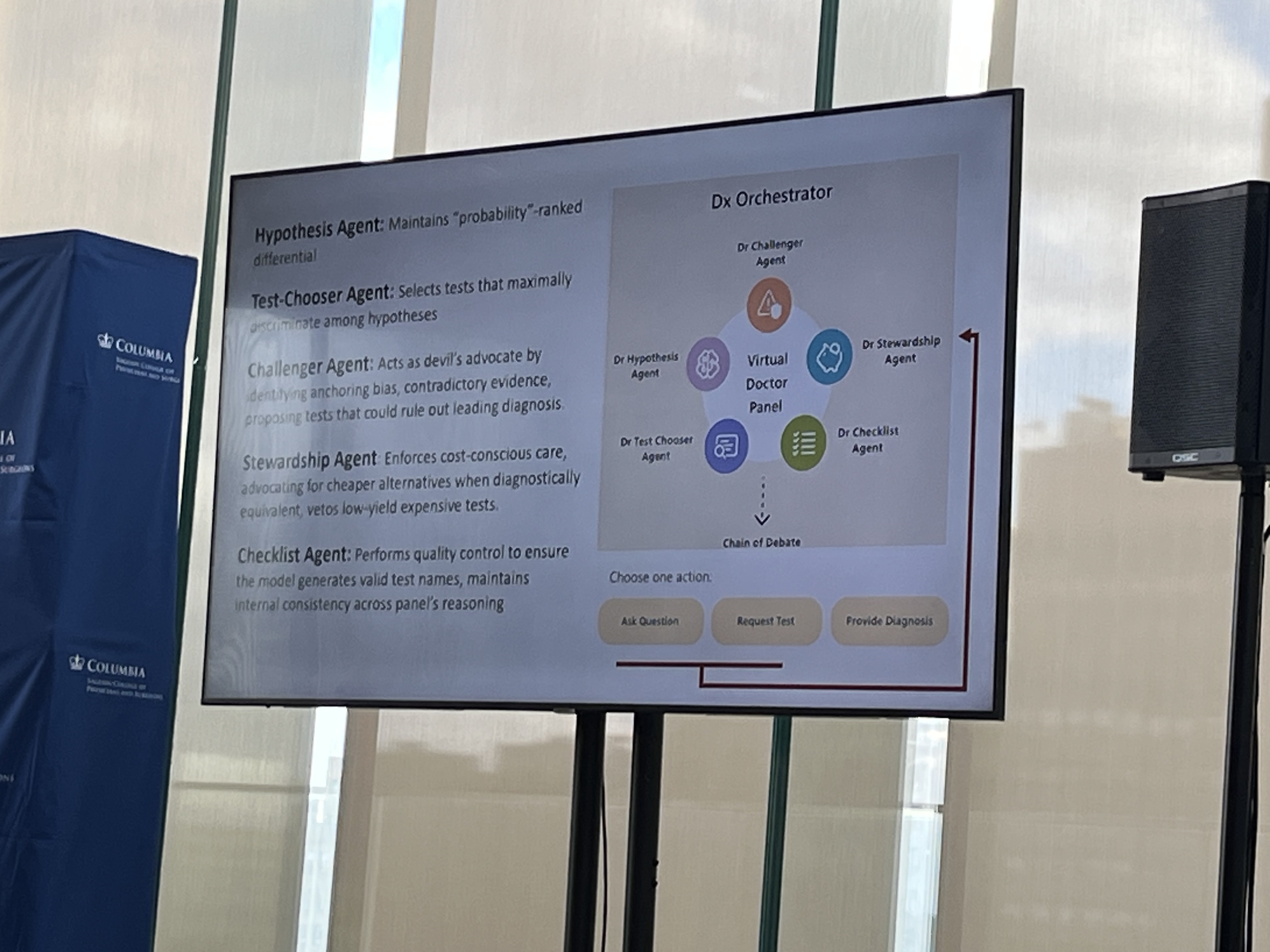

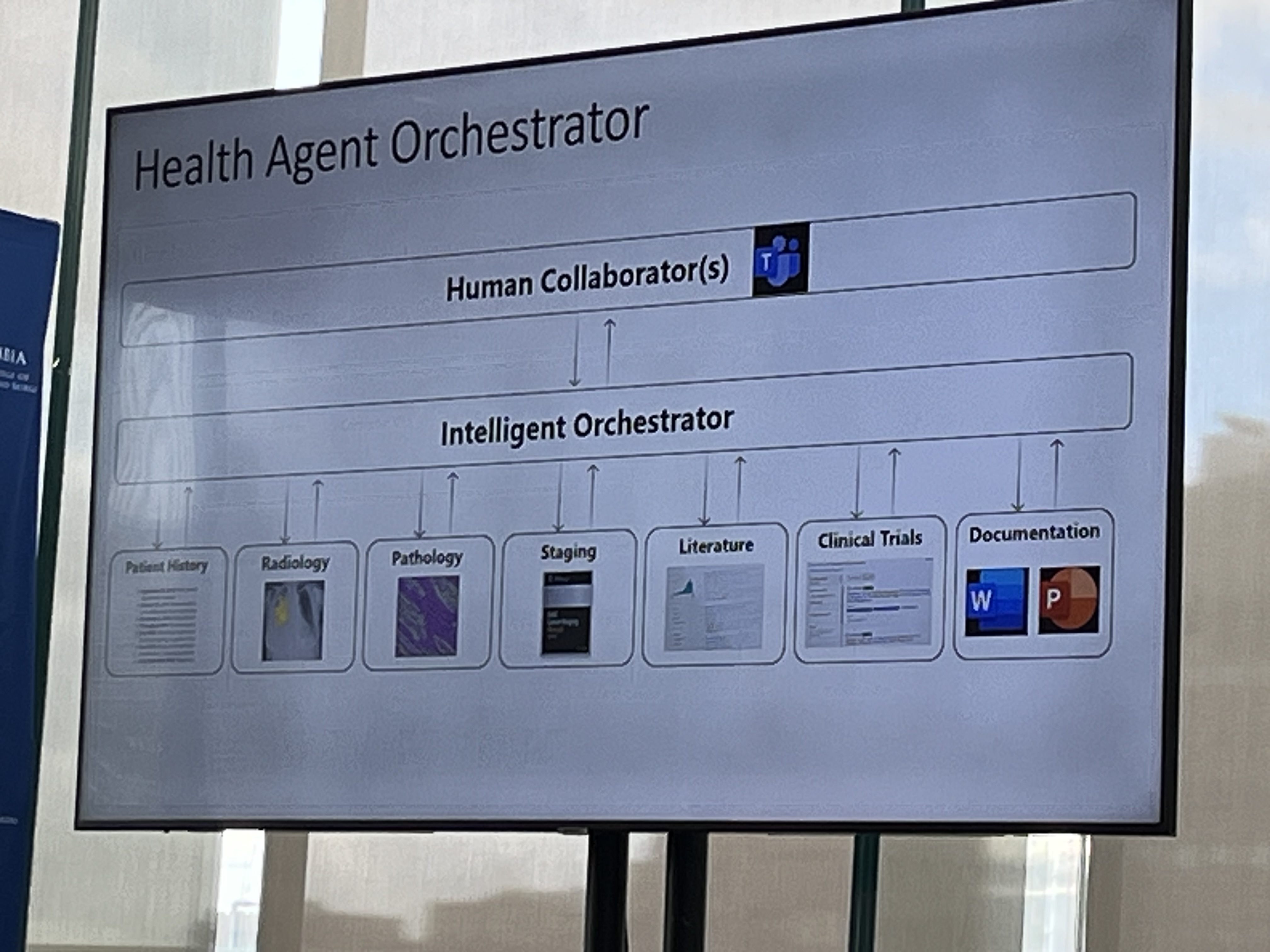

Agentic Systems: Example of Biomedical Research Assistant. IMAGE_HERE. Example of Agents in Diagnosis.